Chess@home: Building the largest Chess AI ever

Update: We’ve won Node Knockout in the “Completeness” category! Congrats to the team!

How we got there

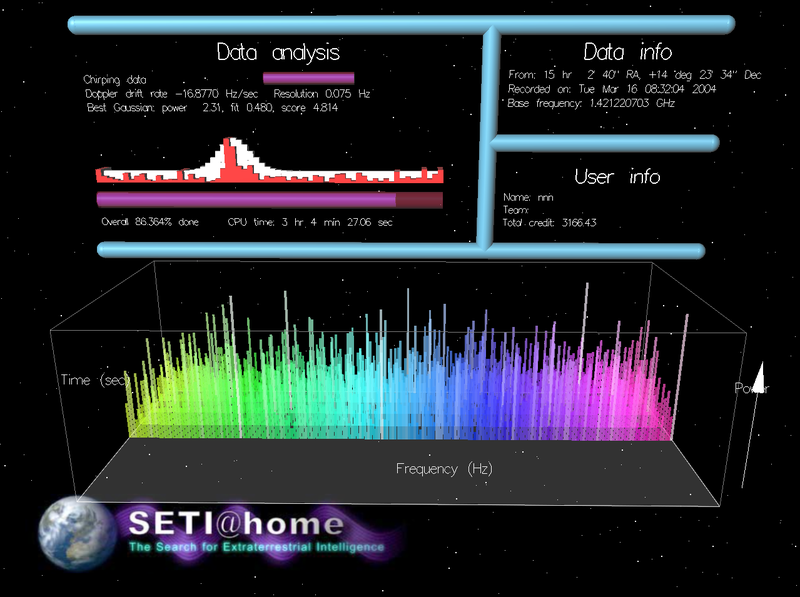

Many people are familiar with the SETI@home project: a very large-scale effort to search for patterns from alien civilizations in the ocean of data we receive from the sky, using the computing power of millions of computers around the globe (“the grid”).

SETI@home has been a success, obviously not in finding aliens, but in demonstrating the potential of large-scale distributed computing. Projects like BOINC have been expanding this effort to other fields like biology, medicine and physics.

Last weekend, a team at Joshfire (Thomas, Nathan, Mickael and myself) participated in a 48-hour coding contest called Node Knockout. Rules were simple: code the most amazing thing you can in the weekend, as long as it uses server-side JavaScript.

JavaScript is an old but exciting technology, currently undergoing a major revival through performance breakthroughs, server-side engines, and an array of new possibilities offered by HTML5. It is also the language that has the biggest scale. Any computer connected to the web can run your JavaScript code extremely easily, just by typing a URL in a browser or running a script from the command line.

We decided to exploit this scale, together with Node’s high I/O performance, to build a large-scale distributed computing project that people could join just by visiting a web page. Searching for aliens was quickly eliminated as we didn’t have our own antenna array available at the time. So we went with a somewhat easier problem: Chess.

None of us is a particularly skilled Chess player. But as we discovered that there was an entry in the Guinness World Records for largest networked Chess AI at 2070 nodes, we realized it was definitely something we could beat. The Chess@home project was born!

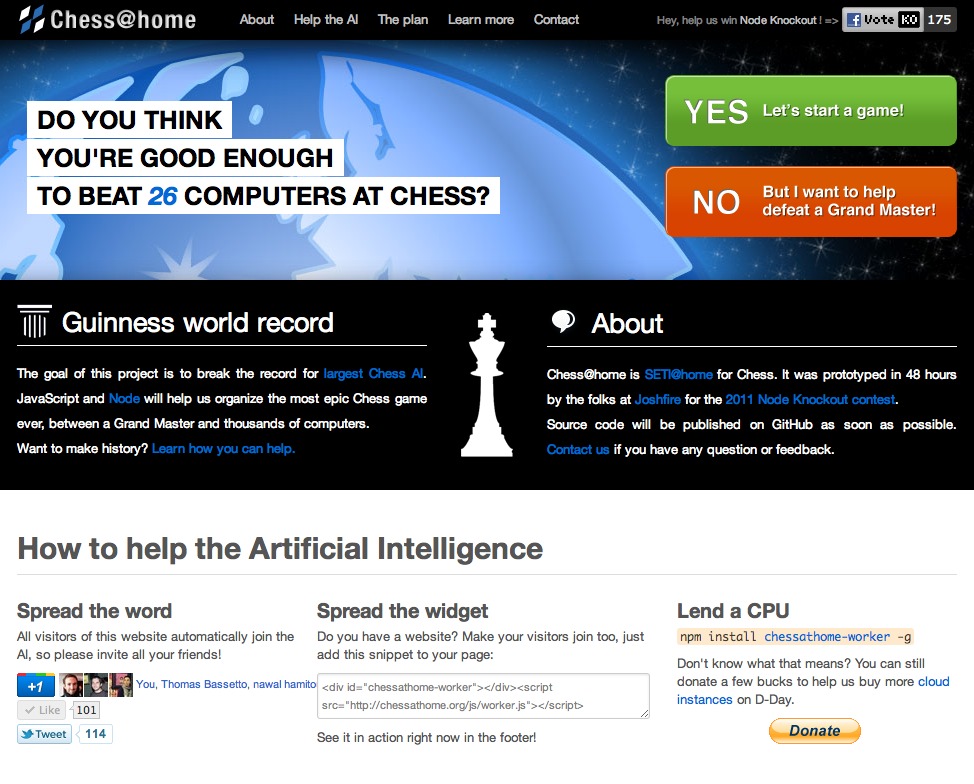

48 hours later, the prototype still had a few bugs but was in a very playable state and looked like this:

Challenges of a distributed Chess AI

As we started doing research, we identified several challenging areas:

Latency. We wanted to follow standard tournament rules (~90-minute games), which meant each move should be computed in about a minute on average. Moreover, players on the web would prefer even shorter response times, so we had to aim as low as we could.

This short compute window wouldn’t play well with most existing distributed computing infrastructures, which typically optimize for throughput. We had to come up with a new, almost real-time infrastructure. WebSockets would definitely be a part of the solution.

Parallelism. Optimizing for latency means sending smaller amounts of computation to each worker. So we had to find a simple way to divide the computation for each move into thousands of smaller pieces.

Without going too deep into the specifics, the minimax algorithm used in most Chess AIs relies on a tree walk which is inherently sequential when you want to do alpha-beta pruning. We researched various algorithms that would be suited to parallelism, keeping the following in mind:

- The workers shouldn’t rely too much on shared variables because websockets don’t work from client to client (going through a server wouldn’t scale).

- The work for each move should be divisible into thousands of smaller units.

We found two potential matches: APHID (simple, used in ChessBrain.net, current holder of the Guinness Record) and variants of YBWC (more complex but more efficient). Given the 48h coding limit, we chose to go with APHID for the time being.
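To make the idea of cutting one search into independent work units more concrete, here is a minimal sketch. It is a toy illustration, not APHID itself and not the Chess@home code: the game tree is a nested array of numbers instead of chess positions, there is no alpha-beta pruning, and the names `splitIntoWorkUnits`, `minimax` and `combine` are made up for the example.

```javascript
// Toy game tree: a node is either a leaf score (number) or an array of children.
// This is a hypothetical stand-in for real chess positions.

// Master side: expand the tree down to splitDepth and emit one work unit
// per node found there (or per leaf reached earlier).
function splitIntoWorkUnits(root, splitDepth) {
  const units = [];
  function walk(node, depth, path) {
    if (depth === splitDepth || typeof node === 'number') {
      units.push({ path, node });
      return;
    }
    node.forEach((child, i) => walk(child, depth + 1, path.concat(i)));
  }
  walk(root, 0, []);
  return units;
}

// Worker side: plain minimax on a subtree (no pruning, for brevity).
function minimax(node, maximizing) {
  if (typeof node === 'number') return node;
  const scores = node.map(child => minimax(child, !maximizing));
  return maximizing ? Math.max(...scores) : Math.min(...scores);
}

// Master side: once every unit has a score, fold the scores back up
// the shallow tree. `results` maps a unit's path (joined with '.') to its score.
function combine(root, splitDepth, results) {
  function walk(node, depth, path, maximizing) {
    if (depth === splitDepth || typeof node === 'number') {
      return results.get(path.join('.'));
    }
    const scores = node.map((child, i) =>
      walk(child, depth + 1, path.concat(i), !maximizing));
    return maximizing ? Math.max(...scores) : Math.min(...scores);
  }
  return walk(root, 0, [], true);
}

// Usage: split at depth 1, "send" each unit to a worker, then combine.
const tree = [[3, 5], [2, [9, 1]], [[4, 6], 7]];
const units = splitIntoWorkUnits(tree, 1);
const results = new Map();
for (const unit of units) {
  // The side to move alternates with depth; the root (depth 0) maximizes.
  const maximizing = unit.path.length % 2 === 0;
  results.set(unit.path.join('.'), minimax(unit.node, maximizing));
}
const best = combine(tree, 1, results);
```

Each unit can be scored without knowing anything about the others, which is what makes it easy to hand out to browsers. The price is that the pruning information a sequential alpha-beta search would carry from one branch to the next is lost in this naive version.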

JavaScript performance. State-of-the-art Chess AIs are able to scan more than 15 million nodes per second (NPS) on today’s high-end hardware. GarboChess, the open source JavaScript Chess AI we forked, ran at around 100k NPS under Chrome on a modern MacBook Pro. This 150x factor didn’t surprise us that much, and in our 48 hours we decided not to invest time into profiling and optimizing this code, which was already capable of beating us on only one machine anyway ;-)

For reference, it is said it took 200 million NPS for Deep Blue to beat Kasparov, which we would theoretically reach at our 2070-node goal.
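The back-of-the-envelope numbers above can be checked in a few lines. This assumes perfectly linear scaling across clients and the same 100k NPS on every machine, which a real grid would not achieve; the variable names are only for illustration.

```javascript
// Figures quoted in the text.
const nativeNps = 15e6;        // state-of-the-art native engine
const garboChessNps = 100e3;   // GarboChess under Chrome, one machine
const targetClients = 2070;    // Guinness record to beat

// Slowdown of the JavaScript engine relative to a native one.
const slowdown = nativeNps / garboChessNps;

// Idealized aggregate speed of the grid (assumes no coordination overhead).
const aggregateNps = garboChessNps * targetClients;

console.log(slowdown);      // 150
console.log(aggregateNps);  // 207000000
```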

Fault tolerance / Security. Running JavaScript code on third-party clients means they can’t be trusted, both for availability (the users can leave the page at any time) and for reliability (malicious clients could send wrong scores and make the AI play dumb moves).

Reliability was ruled out of scope in the initial run, but availability seemed important if we wanted the algorithm to finish on time without missing obvious paths in the decision tree. We settled on a FIFO queue for the work units, stored in a MongoDB server. We set a timeout of 5 seconds on each work unit so that a second worker could grab the unit and recompute it if the first worker didn’t send anything back.
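The queue-with-timeout behaviour can be sketched as follows. This is an in-memory stand-in written for this article, not the MongoDB-backed code from the prototype: the `WorkQueue` class, its method names and the injectable clock are all assumptions made so the example runs on its own.

```javascript
// In-memory FIFO work queue where a handed-out unit becomes available
// again if no result arrives within timeoutMs (5 seconds by default).
class WorkQueue {
  // `now` is injectable so the timeout can be exercised without waiting.
  constructor(timeoutMs = 5000, now = () => Date.now()) {
    this.timeoutMs = timeoutMs;
    this.now = now;
    this.units = []; // kept in insertion (FIFO) order
  }

  add(id, payload) {
    this.units.push({ id, payload, leasedAt: null, done: false, result: undefined });
  }

  // Hand out the oldest unit that is not finished and is either
  // never handed out or whose previous worker has timed out.
  next() {
    const t = this.now();
    const unit = this.units.find(u =>
      !u.done && (u.leasedAt === null || t - u.leasedAt >= this.timeoutMs));
    if (!unit) return null;
    unit.leasedAt = t;
    return { id: unit.id, payload: unit.payload };
  }

  // Record a result. Unknown ids and late duplicate answers are ignored.
  complete(id, result) {
    const unit = this.units.find(u => u.id === id);
    if (!unit || unit.done) return false;
    unit.done = true;
    unit.result = result;
    return true;
  }
}

// Usage with a fake clock: worker 1 takes unit "a" and disappears,
// so after 5 seconds worker 2 gets the same unit.
let clock = 0;
const queue = new WorkQueue(5000, () => clock);
queue.add('a', { position: 'hypothetical position 1' });
queue.add('b', { position: 'hypothetical position 2' });
const first = queue.next();   // unit "a"
const second = queue.next();  // unit "b"
const none = queue.next();    // null: everything is handed out
clock = 5000;
const retried = queue.next(); // unit "a" again, its lease expired
```

With a shared database the "find the oldest available unit and mark it as handed out" step has to be a single atomic operation, otherwise two workers polling at the same moment could receive the same unit before the timeout.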

Our 48h prototype implementation

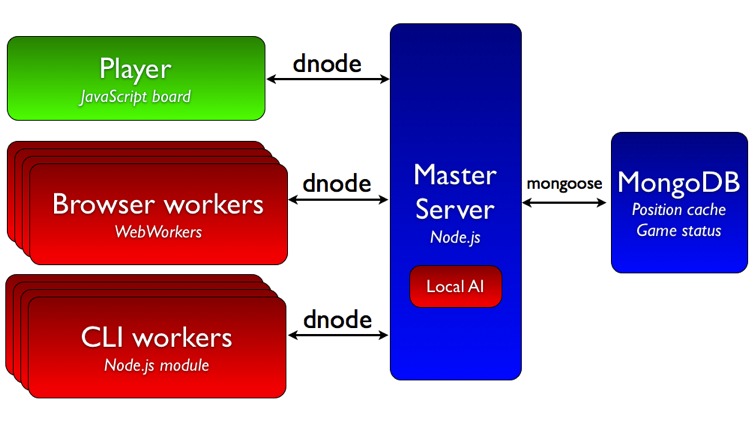

Here is an overview of the prototype design:

Let’s focus on some key components:

- dnode. A Node.js library for asynchronous bidirectional remote method invocation. It provides network socket and websocket-style transports (via socket.io) so that system processes can communicate with each other and with processes running in browsers using the same interface. We used it for nearly all network interactions.

- Web Workers. JavaScript is a single-threaded environment, meaning a long-running script blocks everything else on the page and can freeze the UI. Web Workers allow us to fire up long-running scripts to handle computationally intensive tasks without blocking the UI or the scripts that handle user interactions. On the server side we used node-webworker (a Web Workers implementation for Node) so that we had only one interface to talk with the AI.

- MongoDB. MongoHQ was a sponsor of Node Knockout and we decided to use their turn-key MongoDB instances for storing game states and the potentially huge position cache.

- Node. Central to the architecture, Node allows both fast development (sharing the same code on client and server) and high performance (its non-blocking I/O paradigm has been proven to provide excellent concurrency and throughput).

- Local AI on master. This will probably not scale but we still make a few calls to the AI on the server, mainly because APHID needs to generate a small tree first to divide the work between the clients. It may be removed in the future.
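As an illustration of the Web Workers point above, here is a rough sketch of what the worker side of such a setup could look like. The message shapes (`{ id, position, depth }` in, `{ id, score }` out), the `makeHandler` helper and the placeholder search function are assumptions for this example, not the actual Chess@home protocol or the GarboChess API.

```javascript
// Build a message handler around a search function.
// `search(position, depth)` returns a score; `post(message)` sends the answer back.
// Keeping the handler separate from the worker globals makes it easy to
// run the same logic in a browser worker, in Node, or in a test.
function makeHandler(search, post) {
  return function onMessage(data) {
    const score = search(data.position, data.depth);
    post({ id: data.id, score });
  };
}

// Only runs inside an actual Web Worker, where importScripts exists.
if (typeof importScripts === 'function') {
  // Placeholder: a real worker would call into the chess engine here.
  const placeholderSearch = (position, depth) => 0;
  const handle = makeHandler(placeholderSearch, msg => self.postMessage(msg));
  self.onmessage = event => handle(event.data);
}
```

On the page, the script would be started with `new Worker(...)`, fed with `postMessage`, and its answers read from the worker's `message` events, so the search never runs on the UI thread.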

Remember that this implementation was all done in 48 hours, and we will keep improving it. But launching the AI inside Web Workers, both in browsers and on servers, will remain the basic design idea.

What lies ahead

Widget ethics. Using third-party processing power has usually been opt-in: people needed to install SETI@home or the BOINC software to lend their CPUs. JavaScript allows us to use people’s CPUs without their explicit consent just when they visit a page with the widget, which makes this a grey area.

We decided to allow people to opt-out with a checkbox but widget adoption on third-party websites remains a major challenge towards the 2070-node goal and we will do our best to answer any complaints or objections people might have.

For instance, as we use “public” resources, we definitely want to keep the result as open as we can. The code will remain open source on GitHub (code release this week). We might also open an API to the Chess AI.

AI improvements. After smashing the few remaining UI bugs, we’ll continue optimizing the AI in several areas:

- Better parallelism: We plan to switch from APHID to YBWC to have a more elastic work split between workers. This is critical given the low performance of JavaScript: we must compensate for it with very efficient scaling.

- Unit tests: We’ve already unit-tested a couple hundred positions, but there are a lot more datasets available on the web, such as STS, that we should integrate.

- Algorithmic improvements: GarboChess already implemented alpha/beta pruning, null-window searches, quiescence search, and a few other features but fine-tuning the evaluation function and adding more tweaks to the AI should make it play better in more situations.

Planning for D-Day. When the AI is strong enough, we’ll invite an actual French Chess Grandmaster along with a Guinness official for the most epic Chess game, ever!

We need to coordinate so that as many people as possible join the compute grid at the same time. Installing the widget on a few high-traffic websites could do the trick. We also want people to be able to watch the game on the Chess@home page so that they also join the grid.

If you have other ideas on how to get the maximum number of people connected, please share them! We’re also looking for talented JavaScript developers to help in all the fields described above.

If you want to help you can also have a look at the current list of issues and try to find new ones on Chess@home. Good luck beating the Grid!